Researchers from the University of Arizona, İbrahim Oğuz Çetinkaya, Sajad Khodadadian, and Taylan G. Topçu, have introduced a new approach to mission engineering that combines high-fidelity digital models with reinforcement learning. Their recent paper addresses the evolving challenges of systems engineering (SE) as the discipline shifts from designing monolithic systems to managing complex Systems of Systems (SoS). The study presents an intelligent mission coordination methodology that integrates digital mission models with reinforcement learning (RL), targeting adaptive task allocation and reconfiguration in dynamic, uncertain mission environments.

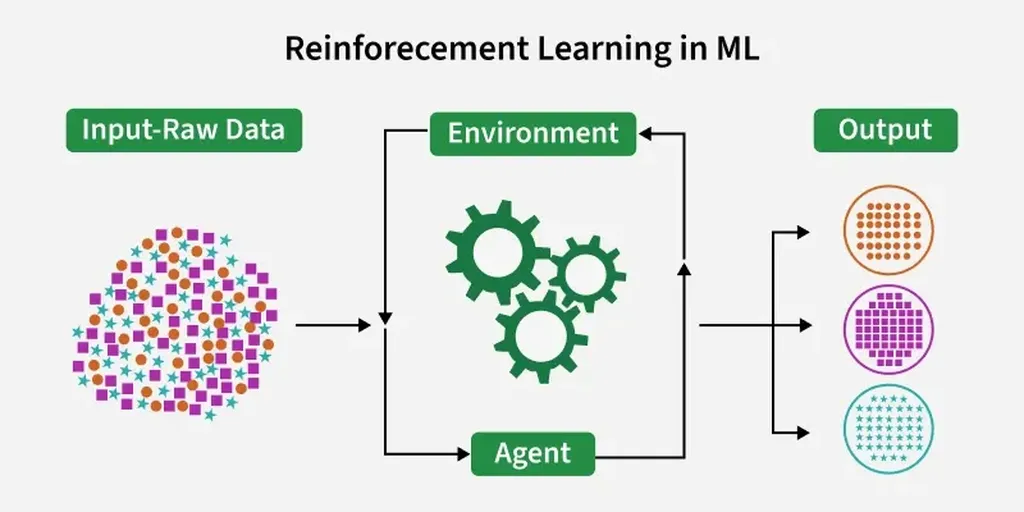

The researchers propose a framework built on Digital Engineering (DE) infrastructure consisting of a high-fidelity digital mission model and an agent-based simulation. They formulate the mission tactics management problem as a Markov Decision Process (MDP) and train an RL agent with Proximal Policy Optimization (PPO). The simulation acts as a sandbox: the agent's policy maps observed system states to actions, and that policy is refined according to the mission outcomes realized in simulation. The goal is a mission coordinator that adapts to changing mission conditions and thereby improves overall mission performance.
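As background on the training method: PPO updates a policy by maximizing a clipped surrogate objective. For each sampled action, the probability ratio r between the new and old policy is clipped to the interval [1 - ε, 1 + ε], and the smaller of the clipped and unclipped advantage-weighted terms is kept, which discourages overly large policy updates. The function below is a minimal, dependency-free sketch of that objective over a batch, assuming log-probabilities and advantage estimates have already been computed. It is not the authors' training code; the name `ppo_clipped_objective` and the default `clip_eps=0.2` are illustrative assumptions.

```python
import math


def ppo_clipped_objective(new_logps, old_logps, advantages, clip_eps=0.2):
    """Batch-average PPO clipped surrogate objective (to be maximized).

    new_logps / old_logps: log pi(a_t | s_t) under the current and the
    data-collecting policy; advantages: precomputed advantage estimates.
    Illustrative sketch only, not the paper's implementation.
    """
    if not (len(new_logps) == len(old_logps) == len(advantages)):
        raise ValueError("inputs must have equal length")
    if not advantages:
        raise ValueError("batch must be non-empty")
    total = 0.0
    for new_lp, old_lp, adv in zip(new_logps, old_logps, advantages):
        ratio = math.exp(new_lp - old_lp)  # r_t = pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Keep the pessimistic (smaller) of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

When the new and old policies agree, every ratio is 1 and the objective reduces to the mean advantage; the clipping only bites once an update moves an action's probability by more than the factor ε.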

The researchers demonstrate the RL-based intelligent mission coordinator in an aerial firefighting case study. The results indicate that the coordinator not only outperforms the baseline but also significantly reduces the variability of mission performance. As a proof of concept, the study suggests that DE-enabled mission simulations combined with advanced analytical tools offer a mission-agnostic framework for improving mission engineering practice. The researchers propose extending the framework in future work to more complex fleet design and selection problems, adopting a mission-first perspective.
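The two claims above, higher mission performance and lower variability, are typically measured as the mean and standard deviation of episode returns over many stochastic simulation runs. The sketch below shows that measurement on a deliberately tiny, hypothetical firefighting MDP: the state is a tuple of fire intensities per zone, the action is which zone a single air tanker drops on, and the reward penalizes remaining fire intensity. Every constant, the dynamics in `step`, and both policies (`round_robin` as a baseline, `most_intense` as a simple greedy rule) are assumptions for illustration; neither policy is the paper's PPO-trained coordinator, and the paper's digital mission model is far richer.

```python
import random
import statistics

# All values below are hypothetical, chosen only to make the toy MDP concrete.
N_ZONES = 3        # number of fire zones
MAX_INTENSITY = 5  # intensity cap per zone
DROP_EFFECT = 2    # intensity removed by one retardant drop
SPREAD_PROB = 0.4  # chance a burning zone intensifies each step
HORIZON = 20       # decision steps per episode


def step(state, action, rng):
    """Drop on zone `action`, then let burning zones spread stochastically."""
    s = list(state)
    s[action] = max(0, s[action] - DROP_EFFECT)
    for i in range(N_ZONES):
        if s[i] > 0 and rng.random() < SPREAD_PROB:
            s[i] = min(MAX_INTENSITY, s[i] + 1)
    reward = -sum(s)  # penalize total remaining fire intensity
    return tuple(s), reward


def round_robin(state, t):
    """Baseline: cycle through zones regardless of state."""
    return t % N_ZONES


def most_intense(state, t):
    """Greedy rule: target the most intense zone (ties go to the lowest index)."""
    return max(range(N_ZONES), key=lambda i: state[i])


def run_episode(policy, rng):
    """Return the total reward of one episode from a random initial state."""
    state = tuple(rng.randint(1, MAX_INTENSITY) for _ in range(N_ZONES))
    total = 0
    for t in range(HORIZON):
        state, reward = step(state, policy(state, t), rng)
        total += reward
    return total


def evaluate(policy, episodes=500, seed=0):
    """Mean and sample standard deviation of episode returns."""
    rng = random.Random(seed)
    returns = [run_episode(policy, rng) for _ in range(episodes)]
    return statistics.mean(returns), statistics.stdev(returns)


if __name__ == "__main__":
    for name, policy in [("round_robin", round_robin), ("most_intense", most_intense)]:
        mean, std = evaluate(policy)
        print(f"{name}: mean return {mean:.1f}, std {std:.1f}")
```

Fixing the seed makes comparisons reproducible; in a study like the one described, the learned policy would replace the hand-written rules, and a lower standard deviation across runs is what "reduced variability in mission performance" would mean in practice.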

The work highlights the potential of pairing high-fidelity digital models with reinforcement learning to handle the complexity and uncertainty of modern mission environments. In the case study, the RL-based coordinator improved both mission performance and its consistency, pointing toward more resilient and effective mission engineering strategies. The study makes the case for adopting digital engineering and reinforcement learning to navigate uncertain, dynamic mission landscapes.