Vijay Janapa Reddi, a researcher in machine learning systems, has introduced TinyTorch, a curriculum designed to bridge the gap between machine learning algorithms and the systems that execute them. It aims to give students the practical skills needed to debug, optimize, and deploy machine learning models.

Machine learning education often teaches algorithms without covering the systems that run them. Students learn about gradient descent, attention mechanisms, and optimizer theory, but they rarely study memory management, inference latency optimization, or the trade-offs involved in model deployment. This “algorithm-systems divide” produces practitioners who can train models but cannot troubleshoot memory issues, optimize performance, or make informed deployment decisions, all skills that are increasingly in demand in industry.

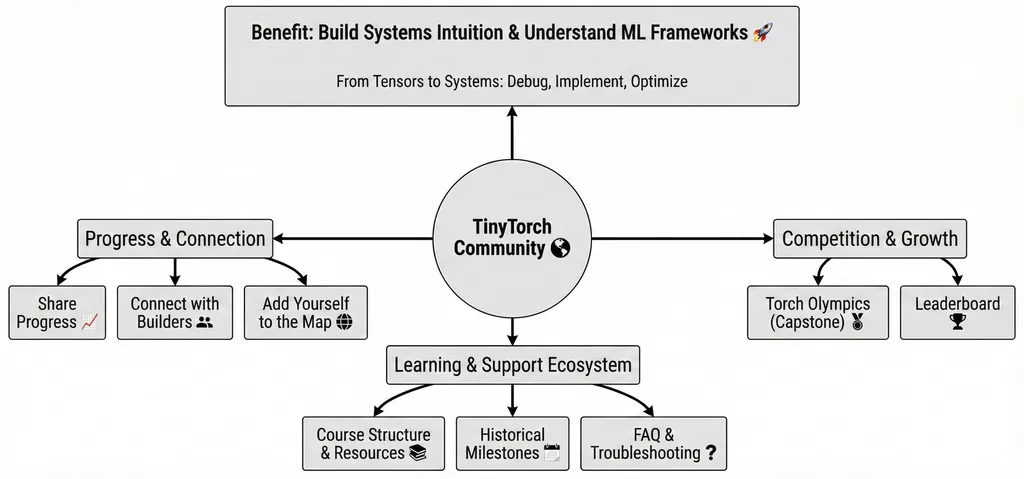

TinyTorch addresses this gap through an implementation-based systems pedagogy. The curriculum consists of 20 modules that guide students through constructing core components of PyTorch, such as tensors, autograd, optimizers, CNNs, and transformers, in pure Python. Because students write the whole framework from scratch, they understand every operation it performs, and they practice building and optimizing machine learning systems rather than only studying the theory.
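To give a sense of what building autograd in pure Python involves, here is a minimal sketch of scalar reverse-mode automatic differentiation. It is not taken from TinyTorch: the class name `Value` and its attributes are hypothetical, chosen only for illustration, and the real curriculum works with tensors rather than single numbers.

```python
class Value:
    """A scalar that remembers how it was computed.

    Hypothetical illustration only; not TinyTorch's actual API.
    """

    def __init__(self, data, parents=()):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents      # the Values this one was computed from
        self._backward_fn = None     # pushes self.grad back to the parents

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def backward_fn():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward_fn = backward_fn
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def backward_fn():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward_fn = backward_fn
        return out

    def backward(self):
        """Apply the chain rule from this node back to every input."""
        order, seen = [], set()

        def visit(node):
            # Depth-first walk that lists parents before their children.
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        # Walk from the output back toward the inputs.
        for node in reversed(order):
            if node._backward_fn is not None:
                node._backward_fn()


# Usage: differentiate y = w * x + b with respect to w, x, and b.
x, w, b = Value(3.0), Value(2.0), Value(1.0)
y = w * x + b
y.backward()
print(y.data, w.grad, x.grad, b.grad)
```

Gradients are accumulated with `+=` so that a value used more than once (for example `x * x`) receives the sum of its contributions.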

The curriculum employs three key patterns to facilitate learning. First, it uses “progressive disclosure” of complexity, introducing more advanced concepts only once students are comfortable with the basics. Second, it integrates “systems-first profiling” from the very first module, so that students measure performance from the outset. Finally, it includes “build-to-validate milestones,” in which students recreate significant results in machine learning history, from the Perceptron (1958) to Transformers (2017), and finish with MLPerf-style benchmarking. Recreating and validating these breakthroughs reinforces the theory and gives students a tangible sense of achievement.
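The idea of profiling from the first module can be illustrated with nothing more than the Python standard library. The sketch below is an assumption about what such a measurement might look like, not TinyTorch's own tooling: the helper names `matmul` and `profile` are hypothetical, and it reports wall-clock time and peak traced memory for a naive pure-Python matrix multiply.

```python
import time
import tracemalloc


def matmul(a, b):
    """Naive matrix multiply on nested lists (illustrative, not optimized)."""
    rows, inner, cols = len(a), len(b), len(b[0])
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


def profile(fn, *args):
    """Run fn(*args) and return (result, seconds elapsed, peak bytes allocated)."""
    tracemalloc.start()
    start = time.perf_counter()
    result = fn(*args)
    elapsed = time.perf_counter() - start
    _, peak = tracemalloc.get_traced_memory()  # returns (current, peak)
    tracemalloc.stop()
    return result, elapsed, peak


# Usage: measure a 64 x 64 multiply.
n = 64
a = [[1.0] * n for _ in range(n)]
b = [[2.0] * n for _ in range(n)]
_, seconds, peak_bytes = profile(matmul, a, b)
print(f"{n}x{n} matmul: {seconds * 1e3:.2f} ms, peak {peak_bytes / 1024:.1f} KiB")
```

Running the same measurement at several sizes makes the cubic growth in time visible, which is the kind of observation a systems-first approach is meant to prompt.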

One of the standout features of TinyTorch is its accessibility. The curriculum requires only 4 GB of RAM and no GPU, so students can run the code on standard laptops without specialized hardware. This widens access to machine learning systems education for students who do not have high-end machines.

The TinyTorch curriculum is available as open source at mlsysbook.ai/tinytorch, giving educators and students a resource for learning how machine learning systems work. By bridging the algorithm-systems divide, TinyTorch aims to equip the next generation of machine learning practitioners with the skills they need in industry. The curriculum is described in an accompanying research paper.